The advent of enterprise AI and ML workloads is creating a seismic shift in computing infrastructure. Distributed computing architecture should be driving this innovation, yet 73% of distributed systems fail to scale beyond their initial deployment. Examining how these systems operate at a fundamental level can better equip teams to implement them properly.

For starters, understanding that AI/ML workloads require 40 times more compute resources than traditional applications helps put the scale of the shift into perspective. Compounding this trend is the reality that AI/ML workloads are becoming increasingly integral to all aspects of business operations.

Distributed systems should be the ideal technological infrastructure upgrade due to their structure. They can enable unprecedented levels of scalability, fault tolerance, and performance optimization when executed correctly. That is why the implementation of distributed architecture is no longer just a niche option; it is a strategic imperative for organizations seeking to leverage their data and computational resources fully.

Distributed Computing Fundamentals

Unlike traditional parallel computing models, which typically involve multiple processors within a single machine, distributed computing involves multiple autonomous computers collaborating to achieve a common computational goal. These computers often span geographical locations, each has its own private memory, and they vary in processing capability. This distribution creates a more resilient and flexible infrastructure built on a few key patterns, primarily:

- Client-server models: Dedicated servers that provide access to resources to requesting clients

- Peer-to-peer networks: Nodes with the functionality of both clients and servers

- Hybrid models: Models that combine client-server and peer-to-peer components

Within distributed computing applications, there is no one-size-fits-all solution. Which model offers the best advantages and fewest trade-offs must be evaluated case by case, based on each project's workload characteristics, scalability requirements, and fault tolerance needs. Fortunately, several free resources, such as the Apache Spark Official Documentation, give developers quick overviews of how cluster architecture operates.

Similarly, industry reports, such as the Gartner Distributed Hybrid Infrastructure Magic Quadrant Report, can further help developers balance performance, scalability, and operational complexity.

Navigating Distributed System Scalability

Through novel load balancing, fault tolerance, and data partitioning designs, distributed systems can scale easily and adapt to increasing workload demands, outperforming traditional parallel infrastructures by significant margins. Horizontal scale-out models achieve this by adding more machines across distributed networks, reinforcing the overall fault tolerance and improving the cost-effectiveness of the network.

Effective load-balancing mechanisms redistribute incoming requests across multiple nodes so that no single node becomes a bottleneck. This enables the network to handle larger volumes and to execute more complex AI and ML workloads more efficiently. Fault tolerance and redundancy designs that replicate data across multiple nodes allow systems to continue operating even when an individual component fails. Netflix’s microservices architecture demonstrates this in action: the design allows the platform to maintain service availability even when multiple components are experiencing issues.
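To make the load-balancing idea concrete, here is a minimal Python sketch of two common routing policies, round-robin and least-loaded. The `Node` class, the node names, and the request counts are hypothetical and chosen only for illustration; production balancers also handle health checks, weights, and retries.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Node:
    name: str
    active_requests: int = 0


class RoundRobinBalancer:
    """Hands out nodes in a fixed rotation, ignoring current load."""

    def __init__(self, nodes):
        self._rotation = cycle(nodes)

    def pick(self):
        return next(self._rotation)


class LeastLoadedBalancer:
    """Picks whichever node currently has the fewest in-flight requests."""

    def __init__(self, nodes):
        self._nodes = list(nodes)

    def pick(self):
        return min(self._nodes, key=lambda n: n.active_requests)


nodes = [Node("node-a"), Node("node-b"), Node("node-c")]
rr = RoundRobinBalancer(nodes)
rr_order = [rr.pick().name for _ in range(4)]

# Simulate node-a being busy; least-loaded routing steers around it.
nodes[0].active_requests = 5
ll = LeastLoadedBalancer(nodes)
chosen = ll.pick()
chosen.active_requests += 1
```

Round-robin is simple and works well when requests cost roughly the same. Least-loaded routing tends to suit workloads with uneven request durations, such as mixed inference and training traffic.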

Optimizing Performance Data

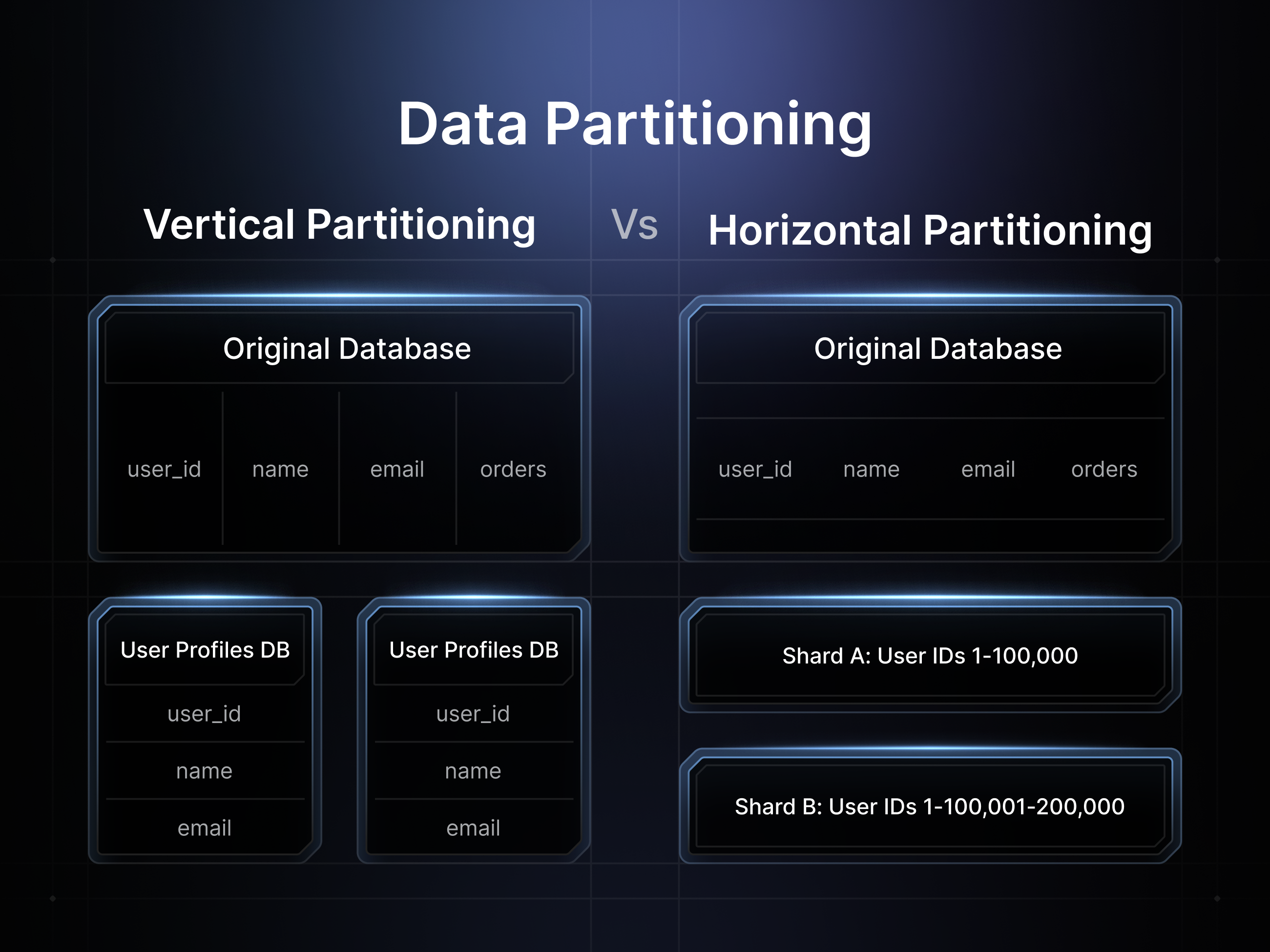

Decisions about data partitioning and consistency are another critical area to understand when building distributed networks. Horizontal partitioning (sharding) divides rows of data across nodes based on specific criteria, such as a key range or hash, while vertical partitioning separates data by column or attribute group, often along functional domains. Choosing the right partitioning option, or a hybrid of the two, can distribute the workload more efficiently across the network, resulting in additional cost savings from reduced overhead.
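The two partitioning styles can be illustrated with a short Python sketch. It assumes a hypothetical four-shard cluster and made-up store names (`profile_store`, `feature_store`); it is not tied to any particular database.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical cluster size


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a record key to a shard using a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    SHA-256 digest is used to keep routing consistent across nodes.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def split_vertically(record: dict, column_groups: dict) -> dict:
    """Split one record into per-store fragments by column group."""
    return {
        store: {col: record[col] for col in cols if col in record}
        for store, cols in column_groups.items()
    }


user = {"id": "user-42", "email": "a@example.com", "embedding": [0.1, 0.2]}

# Horizontal partitioning: the whole row lives on one shard.
shard = shard_for(user["id"])

# Vertical partitioning: columns are split across purpose-built stores.
fragments = split_vertically(
    user,
    {"profile_store": ["id", "email"], "feature_store": ["id", "embedding"]},
)
```

Note that simple modulo hashing remaps most keys whenever the shard count changes. Consistent hashing is a common alternative when nodes are added or removed frequently.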

The Apache Kafka Official Documentation provides comprehensive guidance for developers navigating data partitioning. According to the Gartner Market Guide for Distributed Order Management Systems (2024), organizations that implement proper data partitioning strategies typically see an improvement of up to 60% in their overall system’s performance. Partitioning decisions should therefore not be taken lightly.

Choosing an Optimal Consistency Model

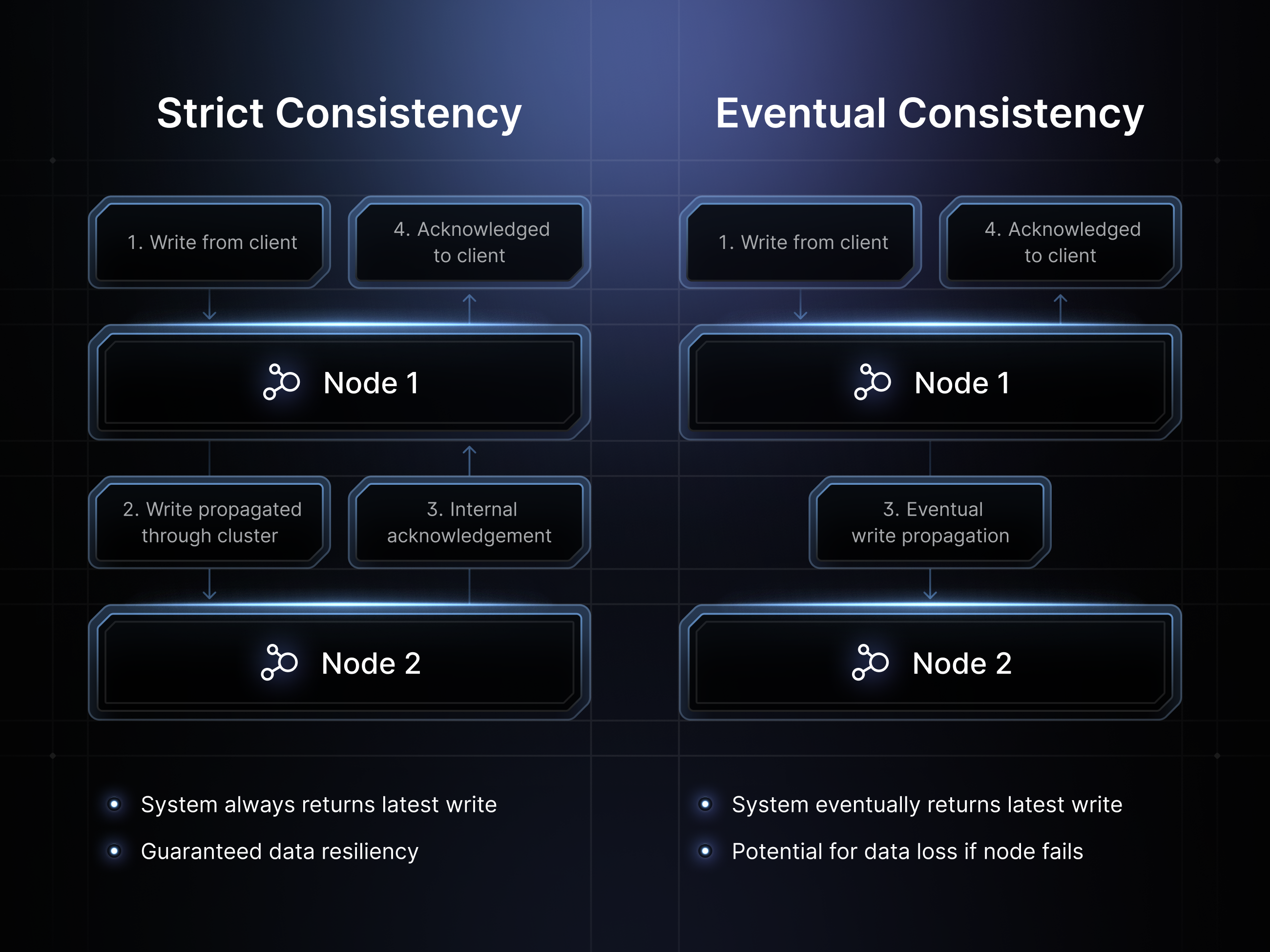

Consistency modeling determines how distributed systems handle data synchronization across their nodes. As with architecture patterns, each distributed system must determine which consistency model suits it best, especially given the impact that choice has on network latency. Strong consistency models ensure that all nodes see the same data at the same time. This guarantees data accuracy and simplifies application logic, but the coordination overhead can reduce overall performance and lead to higher latency.

By comparison, eventual consistency allows for temporary inconsistencies, provided that all nodes eventually converge to the same state. Eventual consistency enables significantly higher throughput and better fault tolerance but requires applications to implement conflict resolution strategies. Amazon's DynamoDB exemplifies this model: a write operation might be visible on some replicas before others, but all replicas eventually converge, usually within a short time.
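As an illustration of convergence under eventual consistency, the sketch below uses last-write-wins, one of several possible conflict resolution strategies. The `Replica` class and the logical timestamps are assumptions made for this example; it does not describe how DynamoDB or any specific database resolves conflicts internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: int  # logical clock value assigned by the writer


class Replica:
    def __init__(self):
        self.store = {}

    def write(self, key, value, timestamp):
        self.merge({key: Versioned(value, timestamp)})

    def merge(self, incoming):
        """Keep the newest version of each key (last-write-wins)."""
        for key, candidate in incoming.items():
            current = self.store.get(key)
            if current is None or candidate.timestamp > current.timestamp:
                self.store[key] = candidate


a, b = Replica(), Replica()
a.write("cart", "book", timestamp=1)
b.write("cart", "book,pen", timestamp=2)
diverged = a.store["cart"] != b.store["cart"]  # replicas disagree for now

# Anti-entropy exchange: each replica merges the other's state.
a.merge(b.store)
b.merge(a.store)
```

After the exchange both replicas hold the version written at timestamp 2. Last-write-wins silently discards the older write, and this sketch does not break timestamp ties, so applications that cannot lose updates usually need a richer strategy such as version vectors or mergeable data types.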

Hybrid models, such as causal and session consistency, enable even greater flexibility in optimizing data synchronization in distributed computing systems. Understanding the nuances of these models is crucial for any project seeking to develop its own distributed system, as it can significantly impact the system’s overall performance.

The Apache Spark Cluster Architecture Guide is an excellent resource for detailing the trade-offs between consistency and performance, particularly in scenarios involving large-scale data analytics and machine learning workflows.

Optimizing Performance

Beyond the architecture, distributed systems can further optimize their performance through improved latency reduction, throughput maximization, and resource allocation strategies.

Latency Reduction Techniques

Latency in distributed systems can be reduced through connection pooling and by strategically locating data close to processing nodes. Sound data partitioning and caching mechanisms further cut network communication overhead. Maintaining low latency is crucial for any high-volume project, such as AI model training, to ensure seamless data synchronization and optimal performance. Distributed caching systems, such as Redis or DragonflyDB, are well suited to providing a shared cache layer across multiple application instances.
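The caching idea can be sketched with the cache-aside pattern. The example below uses a small in-process dictionary with expiry as a stand-in for a shared cache such as Redis; `slow_lookup`, the key name, and the 30-second TTL are hypothetical.

```python
import time


class TTLCache:
    """Tiny in-process cache with per-entry expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # entry is stale, drop it
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (value, self._clock() + self._ttl)


stats = {"backend_calls": 0}


def slow_lookup(key):
    """Hypothetical stand-in for a remote database or feature-store read."""
    stats["backend_calls"] += 1
    return f"value-for-{key}"


def cached_lookup(cache, key):
    value = cache.get(key)
    if value is None:  # cache miss: fall through to the backend
        value = slow_lookup(key)
        cache.set(key, value)
    return value


cache = TTLCache(ttl_seconds=30)
first = cached_lookup(cache, "model-config")
second = cached_lookup(cache, "model-config")
```

The second call is served from the cache, so the backend is contacted only once. The TTL bounds how stale a cached value can become, which is the usual trade-off between latency and freshness.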

Throughput Maximization

Google’s MapReduce demonstrates two key components of throughput maximization that help relieve computational and communication bottlenecks: batch processing and pipeline parallelism. Batch processing amortizes communication costs across multiple operations, while pipeline parallelism allows different stages of computation to execute simultaneously. Together, these two components can dramatically improve network performance and cost efficiency, and they are well suited to distributed networks.
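Both ideas can be sketched in a few lines of Python. This is a simplified illustration using threads and queues, not a description of how MapReduce itself is implemented; the stage functions and the batch size are arbitrary choices for the example.

```python
import queue
import threading


def batched(items, batch_size):
    """Yield fixed-size chunks so one round trip carries many items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def run_pipeline(batches, stages):
    """Run each stage in its own thread, connected by queues.

    While stage 2 works on batch N, stage 1 can already process batch N+1.
    """
    done = object()  # sentinel marking the end of the stream
    queues = [queue.Queue() for _ in range(len(stages) + 1)]

    def worker(stage, inbox, outbox):
        while True:
            item = inbox.get()
            if item is done:
                outbox.put(done)
                return
            outbox.put(stage(item))

    threads = [
        threading.Thread(target=worker, args=(stage, queues[i], queues[i + 1]))
        for i, stage in enumerate(stages)
    ]
    for t in threads:
        t.start()
    for batch in batches:
        queues[0].put(batch)
    queues[0].put(done)

    results = []
    while True:
        item = queues[-1].get()
        if item is done:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results


records = list(range(10))
batches = list(batched(records, batch_size=4))
# Stage 1 squares each value; stage 2 sums the batch.
totals = run_pipeline(batches, [lambda b: [x * x for x in b], sum])
```

Ten records travel in three batches instead of ten separate messages, and each stage has a single worker so results keep their input order. In CPython the threads mainly overlap I/O waits; CPU-bound stages would need processes or separate machines to run truly in parallel.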

Dynamic Resource Allocation

One of the most overlooked benefits of distributed computing systems is their ability to adjust in real-time for scalability, both in pricing and resource allocation. io.net excels in this area by providing a dynamic marketplace that offers users full visibility and access to their GPU clusters. Dynamic scaling enables systems to allocate resources according to current demand without being locked into future contract obligations, while container orchestration platforms, such as Kubernetes, offer sophisticated resource management capabilities that are essential for modern distributed systems.
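A demand-driven scaling decision can be expressed as a simple proportional rule. The sketch below follows the ratio-based formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)); the replica bounds and metric values are hypothetical, and the real autoscaler applies further rules, such as a tolerance band and stabilization windows, that are not modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: grow or shrink in step with load.

    The min/max defaults are hypothetical bounds for this example.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average utilization of 90% against a 60% target: scale 4 -> 6 replicas.
scale_up = desired_replicas(4, current_metric=90, target_metric=60)

# Utilization of 15% against a 60% target: scale 4 -> 1 replica.
scale_down = desired_replicas(4, current_metric=15, target_metric=60)

# A demand spike is clamped to the configured maximum.
capped = desired_replicas(8, current_metric=200, target_metric=50)
```

Clamping to a maximum is what keeps a traffic spike from turning into an unbounded bill, which ties resource allocation back to cost control.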

Additional Considerations for AI/ML Workloads

AI and ML workloads require additional consideration when developing across distributed architecture systems. In addition to the architecture parameters outlined above, they must also account for issues related to training distribution, inference scaling, and compute orchestration. Fortunately, several real-world use cases have already successfully navigated these additional hurdles, and platforms, such as io.net Intelligence, offer a full suite of AI and ML workload services on distributed network infrastructure.

Training Distribution Strategies

Training distribution strategies on these networks must account for gradient synchronization, parameter server architectures, and parallelism techniques. The NVIDIA AI Enterprise Multi-Node Deep Learning Training Guide can guide developers when implementing distributed training across GPU clusters. A clear example of a company already implementing these strategies across distributed networks is Tesla's distributed training infrastructure. It demonstrates how automotive companies can leverage distributed computing to process vast amounts of sensor data for the development of autonomous vehicles.
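Gradient synchronization in data-parallel training can be illustrated with a toy example in plain Python. Each simulated worker computes a gradient on its own data shard, and an averaging step stands in for the all-reduce collective that real frameworks run over the network. The one-parameter model, the data, and the learning rate are assumptions made purely for illustration.

```python
def local_gradient(weight, batch):
    """Gradient of mean squared error for the model y = weight * x."""
    return sum(2 * (weight * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(values):
    """Stand-in for a collective all-reduce: every worker gets the mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


# Each worker holds a different shard of the data (true relation: y = 3x).
shards = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0)],
]
weights = [0.0 for _ in shards]  # identical replicas of a one-parameter model
learning_rate = 0.01

for _ in range(200):
    # 1. Every worker computes a gradient on its local shard only.
    grads = [local_gradient(w, shard) for w, shard in zip(weights, shards)]
    # 2. Gradients are averaged so all workers see the same update.
    synced = all_reduce_mean(grads)
    # 3. Each replica applies the identical update and stays in sync.
    weights = [w - learning_rate * g for w, g in zip(weights, synced)]
```

Because every replica applies the same averaged gradient, the copies never drift apart, and with equal-sized shards the result matches training on the full dataset in one place. A parameter-server design reaches the same goal by sending gradients to a central node instead of averaging them peer to peer.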

Compute Orchestration

Compute orchestration becomes increasingly complex for AI/ML workloads as they scale. The management and coordination of heterogeneous GPUs, TPUs, and highly specialized AI accelerators require more sophisticated scheduling and resource allocation algorithms. The NVIDIA Data Parallelism Training Course claims that proper orchestration can improve the training efficiency of multi-node environments by up to 90%. Platforms like io.cloud simplify the deployment and management process for distributed AI infrastructure, allowing teams to run their models without the burdensome overhead of navigating these complexities.
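To show why heterogeneous hardware complicates scheduling, here is a minimal best-fit placement sketch. The device names, memory sizes, and jobs are hypothetical, and real orchestrators weigh many more factors (interconnect topology, priorities, preemption) than this example does.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    kind: str          # e.g. "gpu" or "tpu"
    free_memory_gb: int


@dataclass(frozen=True)
class Job:
    name: str
    kind: str
    memory_gb: int


def schedule(jobs, accelerators):
    """Best-fit placement: largest jobs first, tightest matching device."""
    placement = {}
    for job in sorted(jobs, key=lambda j: j.memory_gb, reverse=True):
        candidates = [
            a for a in accelerators
            if a.kind == job.kind and a.free_memory_gb >= job.memory_gb
        ]
        if not candidates:
            placement[job.name] = None  # no capacity: job stays pending
            continue
        target = min(candidates, key=lambda a: a.free_memory_gb)
        target.free_memory_gb -= job.memory_gb
        placement[job.name] = target.name
    return placement


pool = [
    Accelerator("gpu-small", "gpu", 24),
    Accelerator("gpu-large", "gpu", 80),
    Accelerator("tpu-0", "tpu", 32),
]
jobs = [
    Job("finetune", "gpu", 60),
    Job("inference", "gpu", 16),
    Job("embedding", "tpu", 16),
    Job("pretrain", "gpu", 70),
]
placement = schedule(jobs, pool)
```

In this run the 70 GB job takes the large GPU, which leaves the 60 GB job pending because no remaining GPU can hold it. Even a toy scheduler therefore has to decide between queueing, preempting, and adding capacity, which is the complexity that managed platforms aim to hide.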

Implementation Frameworks and Best Practices

The successful implementation of a distributed system requires a structured approach to decision-making, as well as the establishment of clear baseline measurements.

Architecture Decision Frameworks

Architecture decision frameworks should evaluate workload demands, scalability requirements, consistency needs, and operational complexity when choosing between different distributed patterns. Examining the Apache Kafka Streams Architecture Documentation can provide valuable insights into how to make these decisions for stream processing applications.

Performance Benchmarking

At the same time, performance benchmarking metrics can provide clearly defined objective measures for assessing a system’s execution efficiency. Simple KPIs that can be used for performance benchmarking include:

- Throughput (operations per second)

- Latency (response time)

- Resource utilization (CPU, memory, network)

- Cost efficiency (performance per dollar)
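These KPIs can be derived from raw benchmark measurements with a few lines of Python. The sample latencies, run duration, core count, and cost figure below are hypothetical, and the percentile uses the simple nearest-rank method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(latencies_ms, wall_clock_s, cpu_busy_s, cores, cost_usd):
    """Turn raw measurements into the four KPIs listed above."""
    operations = len(latencies_ms)
    return {
        "throughput_ops_per_s": operations / wall_clock_s,
        "latency_p50_ms": percentile(latencies_ms, 50),
        "latency_p99_ms": percentile(latencies_ms, 99),
        "cpu_utilization": cpu_busy_s / (wall_clock_s * cores),
        "ops_per_dollar": operations / cost_usd,
    }


# Hypothetical measurements from a 10-second benchmark run.
latencies = [12, 15, 11, 14, 250, 13, 12, 16, 11, 13]
report = summarize(latencies, wall_clock_s=10, cpu_busy_s=24, cores=4,
                   cost_usd=0.05)
```

Reporting a high percentile alongside the median matters here: the median latency is 13 ms, but the single 250 ms outlier only shows up in the 99th percentile, and tail latency is often what users of a distributed system actually notice.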

Navigating Scaling Bottlenecks

It is also important to understand that even a distributed system with well-optimized architecture and performance strategies will often encounter scaling bottlenecks. Monitoring database connection limits, network bandwidth constraints, and coordination overhead helps teams catch these bottlenecks early and mitigate them before they cause performance degradation or system failures. Implementing auto-scaling policies, resource quotas, and cost monitoring then allows these systems to remain both performant and economically viable as they scale.

Distributed Computing Architecture Overview

The compute required to build the foundation of future AI/ML will be powered by distributed computing architecture that can handle enterprise-level workloads efficiently. By understanding fundamental patterns, implementing sound design principles, and applying the optimization techniques outlined above, organizations can develop robust, scalable systems that adapt to increasing demand.

More than anything, the takeaway from this overview is that distributed systems are a nuanced balancing act of competing factors: performance versus cost, consistency versus availability, and complexity versus maintainability. Fortunately, modern solutions like io.intelligence provide platforms that help abstract the complexity of navigating these challenges while still maintaining the flexibility required to support diverse AI/ML applications. Whatever model or platform is chosen to develop distributed computing infrastructure, understanding the technology will be essential for organizations looking to maintain competitive advantages through superior computational capabilities.
