Building machine learning models is easier than ever, but the reality is that 80% never reach production. And it's not due to algorithm complexity or the model itself, but infrastructure costs and deployment bottlenecks that traditional cloud providers can't solve. If AI models are a rocketship, current cloud providers are more slingshot than launchpad.

Startups routinely burn $50,000+ monthly serving models designed to be lean, while enterprise-focused MLOps solutions ignore the resource constraints that kill most AI initiatives before they deliver value. That's all changing with the emerging era of decentralized compute, where you can swap out the cloud mothership for a swarm of agile, responsive GPU drones.

This guide reveals how decentralized compute platforms like io.cloud slash infrastructure costs by up to 70% while maintaining enterprise-grade performance. For AI teams serious about scaling efficiently, mastering these infrastructure strategies is the difference between prototype and production success.

What is MLOps?

MLOps (Machine Learning Operations) is the practice of deploying and maintaining machine learning models in production reliably and efficiently.

It combines machine learning, DevOps, and data engineering to create automated workflows that manage the entire ML lifecycle. That includes everything from data preparation and model training to deployment, monitoring, and retraining.

Unlike traditional software development, MLOps must handle the unique challenges of machine learning: data versioning, experiment tracking, model drift detection, and the complex infrastructure requirements of GPU-intensive workloads. It provides the operational framework that transforms experimental models into production-ready AI systems.

How MLOps Bridges ML and Operations

MLOps serves as the critical bridge between data science teams who build models and engineering teams who deploy and maintain them in production. Traditional handoffs between these teams often fail because:

- Data scientists optimize for model accuracy and experimentation velocity

- Engineers prioritize system reliability, scalability, and cost efficiency

- Different toolsets create friction when transitioning from notebooks to production

MLOps eliminates this friction by establishing shared workflows, standardized deployment practices, and unified monitoring systems. It enables data scientists to deploy models directly while giving engineers the operational controls they need for production systems.

Purpose of MLOps in the ML Lifecycle

MLOps provides structure, repeatability, and reliability across every stage of machine learning development:

- Development: Reproducible environments and experiment tracking

- Training: Scalable compute orchestration and resource management

- Deployment: Automated model serving with version control

- Monitoring: Performance tracking and drift detection

- Maintenance: Automated retraining and model updates

Without MLOps, teams face the "model graveyard" problem where perfectly functional models never reach users due to deployment complexity and infrastructure costs.


1. Why Most MLOps Implementations Fail

The real challenge isn't building better models. It's the invisible cost structure that kills the majority of ML projects before they ever serve a single user. Model quality improves daily thanks to open weights, community research, and robust tooling. The real bottleneck is cost. Specifically, the cost of production deployment.

It's tempting to blame model performance for stalled ML initiatives. But the data tells a different story. According to recent industry surveys, infrastructure costs account for 50% of ML project failures, followed by workflow complexity (30%) and organizational misalignment (20%). The models work, but the economics don't.

Beyond the Model: The Hidden Cost of “Going Live”

Training the model is relatively cheap. The bill starts adding up once training is complete, because production deployment brings substantial overhead, including:

- GPU infrastructure for inference at scale

- Auto-scaling systems to handle traffic spikes

- Storage solutions for model artifacts and logging

- Orchestration platforms like Kubernetes

- Observability tools for monitoring and alerting

- CI/CD pipelines for model versioning and deployment

- Latency optimization across geographic regions

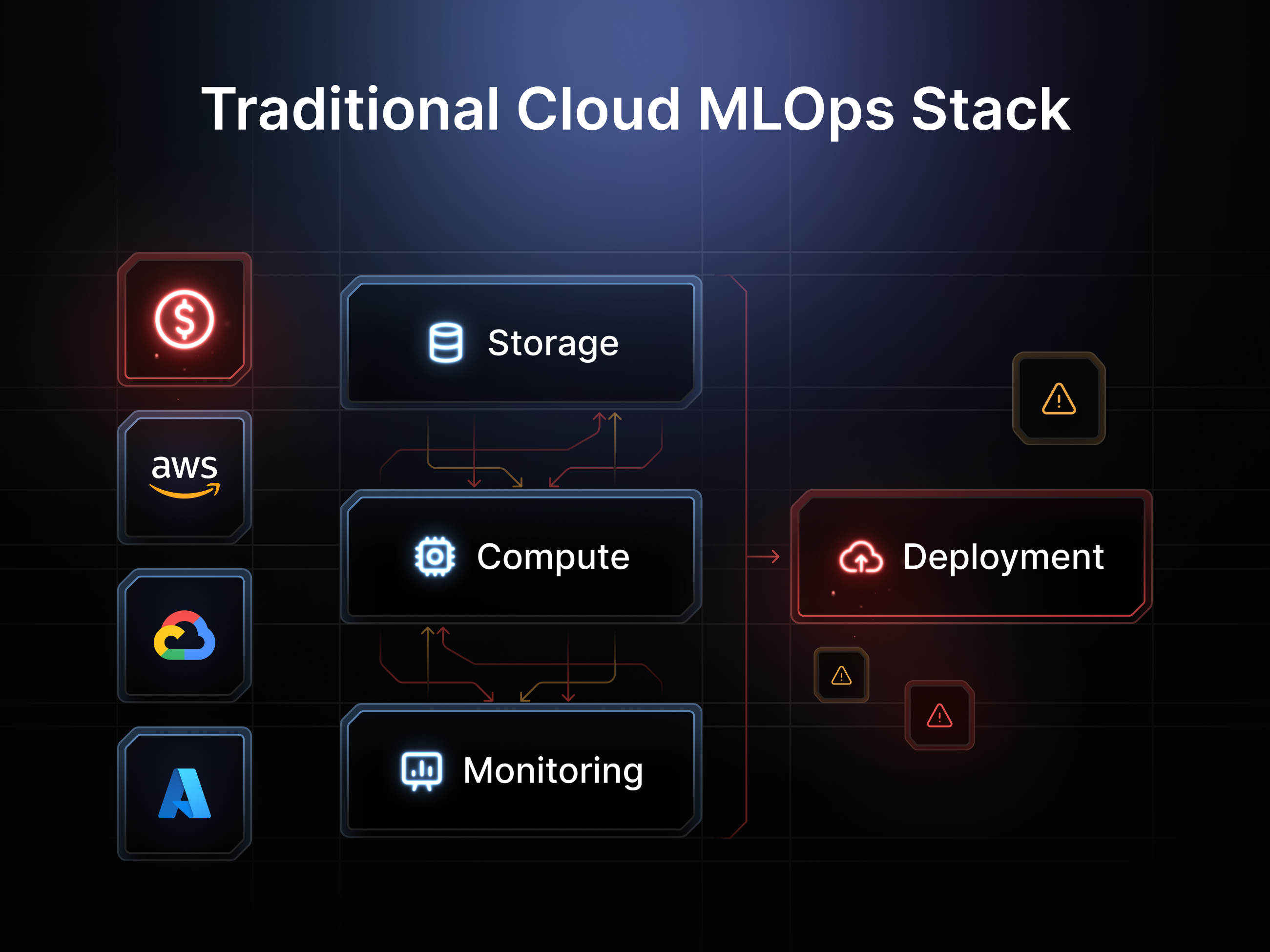

Resource-constrained teams usually default to the major cloud providers. But AWS, GCP, and Azure weren't built for affordability. They were built for enterprise scale. The more teams rely on these platforms, the less transparent and more costly they get.

The MLOps Infrastructure Challenge

MLOps exists to solve a fundamental gap in machine learning: the journey from experimental model to reliable production system.

While data scientists excel at building accurate models in controlled environments, operationalizing these models requires an entirely different set of capabilities:

- Reliability: Models must handle real-world traffic patterns and edge cases

- Scalability: Systems must adapt to varying demand without manual intervention

- Maintainability: Models need monitoring, updates, and retraining as data evolves

- Reproducibility: Deployments must be consistent across environments and teams

This operational complexity is where most ML projects fail. It's not enough to have a working model. You need infrastructure that can serve it reliably, scale it efficiently, and maintain it over time.

Cost Breakdown: A Runaway Spiral

Cloud-native MLOps creates a dangerous feedback loop that costs precious time, money, and resources. The consequences typically entail:

- Auto-scaling inference endpoints that balloon during traffic spikes

- GPU instances accumulating hourly charges while sitting idle

- Data storage costs growing faster than teams can audit

- Monitoring tools charging per metric, trace, and log line

Layer in orchestration overhead from Kubernetes management and workflow tools like Apache Airflow, and monthly bills can cross $50,000 before teams understand where the money went.
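To make the idle-GPU effect concrete, here is a small sketch of how utilization changes the effective price of useful compute. The hourly rate reuses the $12.29 AWS H100 figure quoted later in this guide; the 30-day month, the 25% utilization value, and the function names are illustrative assumptions, not measurements.

```python
HOURS_PER_MONTH = 24 * 30  # simplifying assumption: a 30-day month


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Bill for one always-on instance, regardless of how busy it is."""
    return hourly_rate * hours


def cost_per_useful_hour(hourly_rate: float, utilization: float) -> float:
    """Effective price of each hour that actually serves traffic."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate / utilization


rate = 12.29        # USD per GPU-hour (AWS H100 figure cited in this guide)
utilization = 0.25  # assumed: the GPU is busy a quarter of the time

print(f"Monthly bill:         ${monthly_cost(rate):,.2f}")
print(f"Cost per useful hour: ${cost_per_useful_hour(rate, utilization):,.2f}")
```

Under these assumptions a single always-on GPU bills roughly $8,849 per month, and each hour of real work effectively costs about $49, four times the list rate.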

Primary failure reasons cited in published research:

- Lack of stakeholder support and planning: the top reason according to Siegel's survey ("Survey Surfaces High Rates of AI Project Failure")

- Inadequate infrastructure and MLOps capabilities: reported in RAND Corporation research and echoed in practitioner accounts ("What is your biggest machine learning project failure?" on Quora)

- Data quality and integration issues: reported by NTT DATA, Oteemo, and multiple academic sources

Case Study: The $50K Monthly Reality

Consider a Series A AI startup deploying a customer service chatbot using an open-source NLP model. They chose AWS for their inference pipeline with autoscaling, logging, and high availability. Within eight weeks, infrastructure costs hit $50,000 monthly.

The model itself was efficient, being a lightweight transformer requiring minimal compute. But AWS's hourly billing for expensive instances, combined with data transfer fees, load balancing costs, and Kubernetes management overhead, created an unsustainable burn rate.

Unfortunately, this type of scenario isn't an outlier. It's the standard pattern across the MLOps ecosystem: startups spending like Fortune 500 companies on infrastructure they barely understand and rarely fully utilize.

The io.cloud Alternative: Decentralized GPU Infrastructure

The good news is that io.cloud fundamentally reimagines MLOps economics through decentralized compute. Instead of provisioning expensive, fixed capacity from hyperscalers, teams access a global network of underutilized GPUs on demand.

Key advantages:

- 70% cost reduction compared to equivalent AWS/GCP workloads

- Dynamic resource allocation without idle time charges

- Geographic distribution for reduced latency

- Vendor independence preventing lock-in

Cost Comparison Example:

While actual pricing depends on workload type, runtime, and scaling policies, publicly available data shows a clear cost gap between centralized cloud GPU infrastructure and decentralized compute networks.

AWS costs vary by service and model type:

- For Stable Diffusion inference on AWS SageMaker, a representative production scenario (8 hours/day, 20 days/month) runs about $310/month, or ~$0.85/hour per endpoint. This figure includes autoscaling, storage, and data egress across services like SageMaker, S3, and Lambda.

- For LLaMA-2 inference via AWS Bedrock, published pricing shows rates around $21+/hour per model unit without commitment.

io.cloud all-in pricing is significantly lower:

- Around $0.22/hour for serving similar models with autoscaling, storage, and egress included.

- Headline GPU pricing of $2.19/hour for NVIDIA H100 vs $12.29/hour on AWS.

Note: Actual costs depend on specific instance types, regions, and usage patterns. Teams should benchmark their specific workloads to determine potential savings.
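The gap implied by these figures can be checked with a few lines of arithmetic. This sketch uses only the hourly rates quoted above; the function name and the labels are illustrative.

```python
def savings_pct(baseline: float, alternative: float) -> float:
    """Percentage saved by paying `alternative` instead of `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - alternative) / baseline * 100


# Hourly rates quoted in this section (USD): (AWS, io.cloud)
comparisons = {
    "H100 GPU-hour": (12.29, 2.19),
    "Serving endpoint-hour": (0.85, 0.22),
}

for label, (aws, io_cloud) in comparisons.items():
    print(f"{label}: {savings_pct(aws, io_cloud):.1f}% lower")
```

On these headline numbers the difference is about 82% for the H100 rate and about 74% for the serving rate. Running the same calculation on your own measured bills gives a more reliable estimate than list prices.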

Beyond Cost: Solving Centralization Bottlenecks

Decentralized infrastructure available via io.cloud addresses production challenges that centralized clouds can't, such as:

- Latency optimization: Deploy edge inference nodes closer to users

- Vendor risk mitigation: Route workloads dynamically across providers

- Flexible provisioning: Spin up resources per task, not in advance

- Transparent pricing: Pay only for actual compute time used

Centralized infrastructure favors predictable enterprise workloads. But AI startups need flexibility, pricing transparency, and rapid iteration capability. And that’s exactly what decentralized compute and io.cloud provide.

MLOps vs. DevOps

While MLOps builds on many of the same principles as DevOps, the two disciplines address different challenges. Understanding where they overlap — and where they diverge — is key to choosing the right processes and tools for your AI projects.

What Is DevOps?

DevOps is a set of practices and cultural principles that brings development and operations teams together to build, test, and ship software faster and more reliably. It emphasizes automation, tight feedback loops, and shared ownership so that code moves from idea to production with predictable quality and minimal friction.

Core principles:

- CI/CD (Continuous Integration / Continuous Delivery): Developers continuously merge code changes into a shared repository (CI) where automated tests run; successful builds are then automatically prepared for release and pushed to production or staging environments (CD).

- Automation: Reduce manual, error-prone steps (testing, builds, deployments) to increase speed and consistency.

- Collaboration: Cross-functional teams share responsibility for the entire delivery lifecycle, breaking down silos between dev and ops.

- Monitoring & Feedback: Instrumentation and observability provide rapid feedback on performance and failures so teams can iterate quickly.

What Is MLOps?

MLOps is short for Machine Learning Operations. Fundamentally, MLOps is the adaptation and extension of DevOps principles to the unique demands of machine learning projects. While it shares DevOps’ focus on automation, collaboration, and continuous delivery, MLOps must account for far more than application code.

In practice, MLOps encompasses the entire ML lifecycle, including:

- Data management: Versioning, quality control, and lineage tracking to ensure models are trained on consistent, trustworthy datasets.

- Model training and validation: Automating experimentation, hyperparameter tuning, and evaluation to select the best-performing models.

- Deployment: Packaging and serving models in production environments, often as APIs or integrated components of larger systems.

- Monitoring: Tracking both technical performance (latency, uptime) and predictive quality (accuracy, drift) in real time.

- Retraining: Updating or replacing models as new data arrives or as performance degrades, ensuring continued relevance and compliance.

MLOps addresses additional dimensions, focusing on the interplay between code, data, and models. This approach enables machine learning systems to be developed, deployed, and maintained with the same rigor and speed as modern software applications.

How DevOps and MLOps Are Similar

Both DevOps and MLOps share the common goal of streamlining the path from development to production. They focus on reducing friction and accelerating delivery to bring reliable software and machine learning models into real-world use more quickly. Both disciplines rely heavily on automation, version control, and continuous monitoring to ensure systems remain stable, consistent, and agile throughout their lifecycle. These practices help teams detect issues early and iterate rapidly.

DevOps and MLOps also both emphasize cross-team collaboration. DevOps breaks down silos between development and operations teams, while MLOps bridges the gap between data scientists and engineering teams, fostering shared ownership and coordinated workflows.

Critical Differences

Where MLOps Extends DevOps

MLOps builds on the foundation of DevOps by addressing additional requirements unique to machine learning projects. These include:

- Data versioning and lineage: Tracking changes to datasets over time and understanding the data’s origin and transformations.

- Experiment tracking and reproducibility: Managing model training experiments to ensure results can be reliably reproduced and compared.

- Automated model retraining and validation: Continuously updating models as new data becomes available and validating their performance before deployment.

- Specialized monitoring for model accuracy and drift: Going beyond system uptime to monitor how model predictions perform in production and detecting shifts in data.

Together, these practices let teams maintain robust, accurate, and compliant ML systems throughout their lifecycle.

In a typical DevOps scenario, teams deploy a web application, monitor its uptime and performance, and roll out updates regularly using continuous integration and continuous delivery (CI/CD) pipelines. The focus is on ensuring the application runs smoothly and that new code changes are released reliably.

In contrast, an MLOps scenario involves deploying a machine learning model as an API endpoint. Beyond monitoring technical performance like latency and uptime, teams also track prediction accuracy and detect data drift. Data drift is a shift in the distribution of input data relative to the training data, and it can reduce overall model effectiveness.

When new data becomes available or model performance declines, the model is retrained, validated, and updated seamlessly, ensuring it continues to deliver accurate results in production.
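As a rough illustration of a drift check that could trigger retraining, the sketch below compares the mean of recent inputs for one feature against a reference sample and flags retraining when the shift is large. The function names and the 0.5 standard-deviation threshold are assumptions for illustration; production systems typically use richer tests over full distributions.

```python
from statistics import mean, stdev


def drift_score(reference: list[float], current: list[float]) -> float:
    """Shift of the current mean, in units of the reference standard deviation."""
    ref_std = stdev(reference)  # needs at least two reference values
    shift = abs(mean(current) - mean(reference))
    if ref_std == 0:
        # A constant reference feature: any change at all counts as drift
        return 0.0 if shift == 0 else float("inf")
    return shift / ref_std


def needs_retraining(reference: list[float], current: list[float],
                     threshold: float = 0.5) -> bool:
    """True when the feature has moved further than the chosen threshold."""
    return drift_score(reference, current) > threshold


training_sample = [1.0, 2.0, 3.0, 4.0, 5.0]
recent_inputs = [4.0, 5.0, 6.0, 7.0, 8.0]

print(needs_retraining(training_sample, training_sample))
print(needs_retraining(training_sample, recent_inputs))
```

A mean-shift score is cheap to compute per feature, but it misses changes in spread or shape, which is why dedicated drift tools compare whole distributions.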

Why the Distinction Matters

Understanding the differences between DevOps and MLOps is essential for selecting the right tools, processes, and workflows tailored to machine learning projects. Applying DevOps practices alone to ML initiatives often overlooks critical needs around data management, model retraining, and governance.

This gap can result in failed deployments, unreliable AI systems, and missed business value. By recognizing where MLOps extends and differs from DevOps, teams can build scalable, maintainable, and trustworthy machine learning solutions.

2. MLOps Infrastructure Blueprint for Resource-Constrained Teams

The new playbook for lean AI teams centers on intelligent architecture. It’s about combining open-source orchestration, container-native workflows, and decentralized compute for optimal performance and cost-efficiency. The components are proven, but what's changing is where and how they execute.

The Core Stack: From Idea to Production

Every production MLOps pipeline requires these foundational elements:

- Compute orchestration: Kubernetes, Ray, or Airflow for scheduling and scaling

- Model versioning: MLflow, Weights & Biases, or DVC for reproducibility

- Data pipelines: Spark, Kubeflow, or cloud-native tools for ETL workflows

- CI/CD automation: GitHub Actions or GitLab for deployment pipelines

Most teams cobble these together initially, but complexity and cost spike when defaulting to cloud infrastructure for execution.

Why Centralized Compute Fails the GPU Era

Public clouds excel at general-purpose computing but become expensive traps for AI workloads. GPU-specific scheduling, unpredictable egress fees, and lack of fine-grained cost controls create the following pattern:

- Local training on small cloud instances

- Managed deployment via SageMaker, Vertex AI, etc.

- Autoscaling policies for traffic growth

- Cost explosion with no clear attribution

This structure fundamentally misaligns with modern AI workloads where GPU utilization, cold start times, and latency matter more than traditional metrics like instance uptime.

Decentralized GPU Architecture

Today, io.cloud offers a fundamentally different cost model. Instead of provisioning fixed hyperscaler capacity, teams tap into distributed, independently-operated GPU networks.

How it works:

- Container-native interface: Deploy using standard Docker images

- Ray integration: Full support for distributed training and inference

- Hybrid flexibility: Use on-demand or integrate with existing cloud setup

- Dynamic scaling: Resources allocated per workload, not reserved

This enables the shift from static cloud-first models to true compute-on-demand. The io.cloud framework is especially valuable for inference workloads with unpredictable traffic or training jobs that don't justify long-running clusters.

Qualitative Benefits Without Lock-In

Decentralized GPU architecture delivers significantly reduced cost per GPU-hour compared to major cloud platforms. That’s due to several key factors:

- No cloud overhead: Eliminate markup on idle or underutilized resources

- Hardware choice: Select optimal GPU types (A100s vs L40s vs RTX 3090s) per workload

- Vendor independence: Avoid lock-in while optimizing for actual needs

- Cost transparency: Clear per-GPU-hour pricing without hidden fees

Even without comparing specific price lists, decentralized compute gives teams more control, flexibility, and transparency.

Example Workloads: Training vs Inference

Here are two common use cases that illustrate the contrast between traditional cloud and decentralized compute:

Scenario 1: Distributed Model Training

A team training a document classification model needs 4x A100s for 3 weeks. Instead of locking into cloud capacity, they run containerized Ray jobs on io.cloud's idle A100 network. When training completes, no ongoing costs remain.

Scenario 2: High-Frequency Inference

An AI agent startup deploys multiple lightweight LLMs for real-time user queries. Rather than always-on managed endpoints, they run containerized inference pods across distributed nodes. Models spin up in seconds, get billed per batch, and are torn down automatically.

Both scenarios demonstrate how workload-specific provisioning beats generic cloud abstractions.

Architecture Patterns: Startup vs Enterprise

This modularity supports two distinct approaches:

Startup Stack: Velocity > Stability

- Orchestration: Ray or lightweight Kubernetes

- Versioning: MLflow or Weights & Biases

- Deployment: GitHub Actions + io.cloud container runner

- Compute: Fully decentralized

Ideal for rapid iteration and MVP launches with zero DevOps overhead and linear cost scaling over time.

Enterprise Stack: Control > Agility

- Orchestration: Managed Kubernetes (EKS/GKE) + Ray hybrid nodes

- Versioning: W&B, DVC, plus compliance layers

- Deployment: GitOps with full CI/CD pipelines

- Compute: Hybrid cloud + io.cloud for overflow

Perfect for teams with production SLAs, model governance requirements, and budget optimization mandates.

3. From Development to Production: Step-by-Step MLOps Pipeline

Implementing MLOps in production is rarely plug-and-play, especially for teams with limited time, budget, or infrastructure expertise. Moving from experimentation to deployment requires a systematic approach that balances development speed with operational reliability.

This section outlines how to build a scalable, production-grade MLOps pipeline using io.net’s intelligent stack. From development and training to orchestration, deployment, and monitoring, each phase can plug into your existing toolchain while simplifying infrastructure complexity.

Whether you're a solo developer starting with local-to-cloud workflows or a startup managing multi-model inference at scale, this guide breaks down the core phases, tools, and strategies that make efficient MLOps possible. All without heavy DevOps overhead.

Below, you’ll find a visual overview of the full pipeline and see how io.cloud and io.intelligence power each layer with practical code examples and real-world deployment patterns.

Phase 1: Local Development and Data Versioning

MLOps success starts with reproducible development and portable workflows from day one. Begin by prototyping models on local hardware, but do so with an eye toward scaling. The goal is to establish patterns early that will seamlessly carry over into production, ensuring consistency, reliability, and efficiency as your pipeline grows.

Setting Up Reproducible Environments

Begin with containerized development environments that mirror your production setup. This eliminates the "works on my machine" problem that derails many MLOps initiatives.

FROM python:3.9-slim

WORKDIR /app

# The PyTorch package on PyPI is named "torch"
RUN pip install mlflow wandb dvc torch

COPY . .

Data Pipeline Foundation

Use DVC for data versioning alongside your model code. This creates a single source of truth for both datasets and model artifacts.

# dvc.yaml
stages:
  preprocess:
    cmd: python src/preprocess.py
    outs: [data/processed/]
  train:
    cmd: python src/train.py
    deps: [data/processed/, src/train.py]
    outs: [models/model.pkl]
    metrics: [metrics/train_metrics.json]

Experiment Tracking Setup

Integrate MLflow early to track experiments and ensure model reproducibility:

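# src/train.py
import mlflow
import mlflow.pytorch  # PyTorch flavor, used to log the trained model

# YourModel and train_epoch are placeholders for your own model class
# and single-epoch training routine.

def train_model(config):
    # Everything logged inside this block is attached to one MLflow run
    with mlflow.start_run():
        mlflow.log_params(config)
        model = YourModel(**config)

        for epoch in range(config['epochs']):
            train_loss = train_epoch(model)
            mlflow.log_metric('train_loss', train_loss, step=epoch)

        # Store the trained model as a run artifact
        mlflow.pytorch.log_model(model, "model")
        return model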

Key principles:

- Design with portability from the start

- Align local environments with production containers

- Track all hyperparameters, metrics, and artifacts

Phase 2: Distributed Training with io.cloud

Once your model is validated locally, you can scale training using Ray on io.cloud with minimal code changes. If portability was built in from the start, transitioning from local to distributed execution becomes fast and frictionless.

Ray Integration for Distributed Training

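# src/distributed_train.py
import ray
from ray.train import ScalingConfig
from ray.train.data_parallel_trainer import DataParallelTrainer

def distributed_training():
    # Placeholder address: replace with your cluster's Ray Client endpoint
    ray.init(address="ray://io-cloud-cluster:10001")

    # Four workers, each reserving one GPU
    scaling_config = ScalingConfig(
        num_workers=4,
        use_gpu=True,
        resources_per_worker={"GPU": 1}
    )

    # your_train_function is a placeholder for your per-worker training loop
    trainer = DataParallelTrainer(
        train_loop_per_worker=your_train_function,
        scaling_config=scaling_config
    )
    return trainer.fit()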

io.cloud Job Configuration

Configure your training job with YAML templates for easy repeatability and cost control:

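# io_cloud_job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ml-training  # example name
spec:
  template:
    spec:
      restartPolicy: Never  # Jobs require Never or OnFailure
      containers:
        - name: training
          image: your-registry/ml-training:latest
          command: ["python", "src/distributed_train.py"]
          resources:
            limits:
              nvidia.com/gpu: 4  # GPUs are requested under limits
            requests:
              memory: "32Gi"
          env:
            # Cost ceiling passed to the container as an environment variable
            - name: MAX_COST_PER_HOUR
              value: "50"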

Benefits:

- Ray abstracts GPU cluster complexity

- Container execution ensures cross-environment reproducibility

- Built-in cost controls prevent runaway spending

- Access to high-end GPUs without long-term commitments

Phase 3: Production Deployment with io.intelligence

Deploy trained models to production using io.intelligence, which offers dynamic scaling, intelligent traffic routing, and cost monitoring. The platform supports both REST APIs and streaming interfaces to match your specific use case.

Model Serving Configuration

# src/model_server.py
from fastapi import FastAPI
import mlflow.pytorch
import torch

app = FastAPI()
model = None

@app.on_event("startup")
async def load_model():
    # Load the registered model once, when the server starts
    global model
    model = mlflow.pytorch.load_model("models:/your-model/production")
    model.eval()

@app.post("/predict")
async def predict(features: list[float]):
    # Assumes the model returns a single scalar for one feature vector
    with torch.no_grad():
        prediction = model(torch.tensor(features))
    return {"prediction": prediction.item()}

@app.get("/health")
async def health():
    return {"status": "healthy"}

Deployment with Auto-scaling

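# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: model-server  # example name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: model-server
  template:
    metadata:
      labels:
        app: model-server  # must match the selector above
    spec:
      containers:
        - name: server
          image: your-registry/model-server:latest
          resources:
            limits:
              nvidia.com/gpu: 1  # GPUs are requested under limits
            requests:
              memory: "2Gi"
          env:
            - name: COST_LIMIT_PER_HOUR
              value: "10"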

Benefits:

- Dynamic scaling adapts to real-time demand

- Smart traffic routing optimizes performance across replicas

- Built-in cost controls cap GPU spend per hour

- Supports both REST and streaming APIs for flexible integration

Phase 4: Monitoring and Cost-Aware Optimization

Production systems must balance performance and cost. io.intelligence enables this with real-time monitoring of latency, throughput, and spend, along with automated optimization to keep deployments efficient.

Cost-Aware Monitoring Setup

# src/monitoring.py
import logging
from prometheus_client import Counter, Histogram, Gauge

REQUEST_COUNT = Counter('requests_total', 'Total requests')
REQUEST_LATENCY = Histogram('request_duration_seconds', 'Latency')
COST_PER_INFERENCE = Gauge('inference_cost_usd', 'Cost per inference')

class CostMonitor:
    def __init__(self, cost_limit=50):
        self.cost_limit = cost_limit  # USD per hour
        self.hourly_cost = 0          # reset this from an hourly scheduler

    def track_inference(self, cost, latency):
        REQUEST_COUNT.inc()
        REQUEST_LATENCY.observe(latency)
        COST_PER_INFERENCE.set(cost)
        self.hourly_cost += cost
        if self.hourly_cost > self.cost_limit:
            self.alert_cost_exceeded()

    def alert_cost_exceeded(self):
        # Minimal placeholder: swap in your paging or alerting integration
        logging.warning("Hourly cost %.2f exceeded limit %.2f",
                        self.hourly_cost, self.cost_limit)

Benefits:

- Real-time tracking of latency, throughput, and cost per inference

- Prometheus integration enables transparent monitoring at every endpoint

- Customizable cost limits help prevent budget overruns

- Automated alerts support proactive optimization and scaling decisions
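One detail worth handling in a cost monitor is resetting the running total when a new hour begins; otherwise an hourly limit behaves like a lifetime cap. The sketch below shows one way to do that using only the standard library. The class name, the injectable clock, and the fixed 3600-second window are illustrative assumptions rather than part of any platform API.

```python
import time


class HourlyBudget:
    """Tracks spend within a fixed window and resets when the window expires."""

    def __init__(self, limit_usd: float, clock=time.monotonic,
                 window_s: float = 3600.0):
        self.limit_usd = limit_usd
        self.window_s = window_s
        self._clock = clock  # injectable so the logic can be tested
        self._window_start = clock()
        self.spent = 0.0

    def add(self, cost_usd: float) -> bool:
        """Record a cost; return True if the current window is over budget."""
        now = self._clock()
        if now - self._window_start >= self.window_s:
            # A new window has started: forget the previous hour's spend
            self._window_start = now
            self.spent = 0.0
        self.spent += cost_usd
        return self.spent > self.limit_usd


budget = HourlyBudget(limit_usd=10.0)
if budget.add(0.004):
    print("Hourly budget exceeded")
```

Passing the clock in as a parameter keeps the class easy to unit test, since a test can advance time without sleeping.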

Phase 5: A/B Testing and Canary Deployments

Minimize risk when deploying new models by using progressive delivery strategies with automated rollback capabilities.

A/B Testing Implementation

# src/ab_testing.py
import random

class ABTestRouter:
    def __init__(self, canary_percentage=10):
        self.canary_percentage = canary_percentage
        # Per-variant samples of a lower-is-better metric (e.g. latency or error rate)
        self.metrics = {'stable': [], 'canary': []}

    def route_request(self):
        # Send roughly canary_percentage% of requests to the canary
        if random.randint(1, 100) <= self.canary_percentage:
            return 'canary'
        return 'stable'

    def should_rollback(self):
        # Wait for at least 100 canary samples, and avoid dividing by zero
        if len(self.metrics['canary']) < 100 or not self.metrics['stable']:
            return False
        stable_avg = sum(self.metrics['stable']) / len(self.metrics['stable'])
        canary_avg = sum(self.metrics['canary']) / len(self.metrics['canary'])
        return canary_avg > stable_avg * 1.2  # canary is more than 20% worse

Benefits:

- Progressive delivery reduces risk when launching new models

- Canary routing allows real-world testing on a small traffic slice

- Performance-based rollback ensures only stable versions go live

- Customizable thresholds let teams fine-tune rollout sensitivity
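A router that picks a variant at random for every request can send the same user to different models on consecutive calls. Where consistent assignment matters, one common alternative is to hash a stable identifier into a bucket. The sketch below assumes each request carries a `user_id` string, which is a hypothetical field, and the function name is illustrative.

```python
import hashlib


def assign_variant(user_id: str, canary_percentage: int = 10) -> str:
    """Deterministically map a user to 'canary' or 'stable'."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in [0, 100)
    return "canary" if bucket < canary_percentage else "stable"


# The same user always lands on the same variant
print(assign_variant("user-42") == assign_variant("user-42"))
```

Because the bucket depends only on the identifier, raising the canary percentage keeps existing canary users on the canary and only moves additional users across.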

Phase 6: Multi-Model Orchestration and Cost Optimization

Once multiple models are in production, scale effectively using io.intelligence’s Model & Agent Marketplace for intelligent orchestration and cost-based routing.

Dynamic Model Selection

# src/multi_model_orchestration.py
class ModelOrchestrator:
    def __init__(self):
        self.models = {
            'fast': {'cost': 0.001, 'latency': 50, 'accuracy': 0.89},
            'accurate': {'cost': 0.01, 'latency': 200, 'accuracy': 0.95},
            'premium': {'cost': 0.05, 'latency': 1000, 'accuracy': 0.97}
        }

    def select_model(self, max_cost=float('inf'), max_latency=float('inf')):
        viable = [name for name, specs in self.models.items()
                  if specs['cost'] <= max_cost and specs['latency'] <= max_latency]
        if not viable:
            return 'fast'  # Fallback
        # Return most accurate viable model
        return max(viable, key=lambda x: self.models[x]['accuracy'])

Benefits:

- Efficiently orchestrate multiple models with intelligent routing

- Optimize for cost, latency, or accuracy based on real-time needs

- Dynamically select the best model to balance performance and budget

- Fallback logic ensures continuous service even under constraints
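To see how this kind of selection rule behaves, the sketch below restates it as a standalone function over the same three example models and calls it with different limits. The module-level `MODELS` table and the `fallback` parameter are illustrative choices made so the example runs on its own.

```python
MODELS = {
    "fast": {"cost": 0.001, "latency": 50, "accuracy": 0.89},
    "accurate": {"cost": 0.01, "latency": 200, "accuracy": 0.95},
    "premium": {"cost": 0.05, "latency": 1000, "accuracy": 0.97},
}


def select_model(max_cost=float("inf"), max_latency=float("inf"),
                 fallback="fast"):
    """Most accurate model within both limits, else the fallback."""
    viable = [name for name, spec in MODELS.items()
              if spec["cost"] <= max_cost and spec["latency"] <= max_latency]
    if not viable:
        return fallback
    return max(viable, key=lambda name: MODELS[name]["accuracy"])


print(select_model())                  # no limits: 'premium'
print(select_model(max_cost=0.02))     # budget rules out 'premium': 'accurate'
print(select_model(max_latency=100))   # only 'fast' is quick enough: 'fast'
print(select_model(max_cost=0.0001))   # nothing fits: falls back to 'fast'
```

Note that the fallback is returned even when it exceeds the requested limits, so callers with a hard budget should check the chosen model against their constraints before dispatching.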

This implementation provides a complete, cost-conscious MLOps pipeline that scales from solo development to production deployment. The key differentiator is integrated cost monitoring and optimization at every stage, enabled by io.cloud's decentralized infrastructure.

Key takeaways for implementation:

- Start with reproducible, containerized development environments

- Use Ray for seamless scaling from local to distributed training

- Implement cost-aware monitoring from day one

- Build progressive delivery strategies to minimize deployment risk

- Leverage multi-model orchestration for optimal cost-performance tradeoffs

4. Essential MLOps Stack for Modern AI Teams

Successful MLOps requires choosing the right tools to orchestrate, monitor, and govern the full lifecycle of machine learning models. As AI teams scale, these tools must support collaboration, reproducibility, observability, and budget accountability.

This section covers the core open-source components that power modern pipelines and demonstrates how io.net’s intelligent infrastructure integrates seamlessly. The result is that teams have the freedom to build modular stacks without vendor lock-in.

Open-Source Tools That Power Scalable MLOps

Modern MLOps relies on battle-tested open-source components that teams can mix and match without expensive licensing.

Kubeflow: Kubernetes-native framework for ML workflows. Enables teams to chain preprocessing, training, evaluation, and deployment into repeatable DAGs. Best for teams already running Kubernetes.

MLflow: Industry-standard experiment tracking, model packaging, and deployment. Logs parameters, metrics, and artifacts while providing a central model registry for staging and promotion. Essential for model versioning across development stages.

Apache Airflow: Powers data and training pipelines with dynamic DAG generation, retry logic, and extensive integrations. Ideal for scheduling non-ML tasks including ETL and batch inference.

DVC: Data Version Control treats datasets and models like code, integrating with Git for hash-based tracking of data, configurations, and binaries. Critical for collaborative teams managing large datasets.

These tools excel when teams can choose compute infrastructure flexibly without rewriting pipelines for different environments.

Monitoring, Drift Detection, and Model Governance

Production model management extends beyond keeping endpoints online to detecting drift, tracking performance, and enforcing governance policies.

Essential monitoring stack:

- Prometheus + Grafana: Real-time metrics and dashboards

- Evidently AI: Drift detection and data quality monitoring

- Great Expectations: Input data validation before model inference

- ArgoCD: GitOps-style model delivery and rollback automation

Together, these tools support governance in regulated environments while enabling high-velocity iteration for agile teams.

io.intelligence: Native Integration Without Vendor Lock-In

The io.intelligence platform integrates cleanly into existing MLOps stacks as an orchestration and optimization layer rather than replacing established tools.

Integration examples:

- With MLflow: Use registered model URIs (models:/<model_name>/production) directly in FastAPI, Flask, or KServe containers. io.intelligence handles scaling and routing without changing model loading.

- With Kubeflow/Airflow: Replace cloud training operators with io.cloud job runners. Since jobs are containerized, swapping execution environments requires only YAML or DAG configuration changes.

- With ArgoCD/GitHub Actions: Package model deployments as versioned containers promoted via Git automation. io.intelligence reads standard manifests and provides real-time cost/performance observability.

- With Prometheus/Grafana: io.intelligence exports Prometheus-compatible metrics (latency, throughput, cost per inference) that plug into existing dashboards.
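For readers unfamiliar with what "Prometheus-compatible" means in practice, the sketch below renders gauge metrics in the Prometheus text exposition format using only the standard library. The metric name and the `model` label are hypothetical; the names io.intelligence actually exports are not specified in this guide.

```python
from typing import Mapping


def render_gauge(name: str, help_text: str, samples: Mapping[str, float],
                 label: str = "model") -> str:
    """Render one gauge metric with a single label in Prometheus text format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for label_value, value in sorted(samples.items()):
        lines.append(f'{name}{{{label}="{label_value}"}} {value}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical metric name and values, for illustration only.
    print(render_gauge(
        "inference_cost_usd_per_request",
        "Average cost per inference request.",
        {"fraud-detector": 0.0004, "reranker": 0.0011},
    ))
```

Any endpoint that serves text in this shape can be scraped by Prometheus and charted in Grafana without custom exporters.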

Cost-Effective Alternatives to Enterprise MLOps Platforms

Many enterprise MLOps platforms charge high premiums for features like experiment tracking, autoscaling, and observability. Each of these capabilities can be replicated using open-source software combined with io.net’s infrastructure.

An open-core approach allows teams to avoid lock-in while still delivering enterprise-grade performance and governance.

Building a Composable MLOps Stack

Modern AI teams are increasingly adopting a composable MLOps approach: choosing best-in-class tools for each function (data, training, deployment, and monitoring) and connecting them through containerized, declarative workflows. io.net was built for this mindset.

Whether you use Argo for delivery or Ray for training, io.net’s decentralized GPU backbone and orchestration APIs let each layer operate independently while scaling together.

Composable Stack Example:

    # training_pipeline.yaml
    steps:
      - name: preprocess
        operator: python
        script: scripts/preprocess.py
      - name: train
        operator: io.cloud
        image: your-registry/trainer:latest
        resources:
          gpus: 4
          memory: 64Gi
        constraints:
          max_cost_per_hour: 30
      - name: deploy
        operator: io.intelligence
        model: outputs/model.pkl
        scaling:
          min_replicas: 1
          max_replicas: 10
          target_latency_ms: 200

The future of MLOps is modular, open, cost-aware, and infrastructure-agnostic. The best stacks aren’t bought, they’re composed. With io.cloud and io.intelligence, AI teams can extend their favorite tools’ capabilities while cutting infrastructure costs and avoiding the lock-in that limits long-term agility.

Whether managing one model or one hundred, integrating with the right ecosystem unlocks scalability without compromise.

5. Scaling MLOps Without Breaking the Budget

Building an initial deployment pipeline is a major milestone, but true MLOps scalability demands advanced orchestration, cost awareness, and infrastructure control. As models multiply and inference workloads grow, teams face the challenge of expanding capabilities without letting costs spiral out of control. It’s a problem traditional cloud providers simply can’t solve.

This section explores how io.net’s decentralized infrastructure supports complex, multi-model workloads at a fraction of the typical cloud cost, without compromising security, observability, or performance.

Multi-Model Management Across Distributed Infrastructure

Many modern AI applications don’t rely on a single model. They combine LLMs, custom classifiers, vector databases, and lightweight agents into cohesive systems. Managing these models in production requires:

- Intelligent routing. Serving requests to the lowest-cost, highest-accuracy model for a given task.

- Dynamic composition. Combining outputs from multiple models (e.g., reranking LLM results with a trained classifier).

- Seamless model handoff. Managing transitions between models during real-time inference.

io.intelligence provides orchestration primitives that handle these complexities natively. Developers can register multiple models, set routing policies, and define agent workflows that combine different model outputs. This abstracts away most of the infrastructure logic and enables low-latency, cost-aware model switching at scale.
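A routing policy of the kind described above can be sketched in a few lines. This is a generic illustration, not the io.intelligence API: `ModelOption`, `route`, and the accuracy and cost figures are all hypothetical. The policy picks the cheapest registered model that meets a minimum accuracy for the task.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    task: str
    accuracy: float              # offline evaluation score in [0, 1]
    cost_per_1k_requests: float  # in dollars


def route(task: str, min_accuracy: float,
          options: Iterable[ModelOption]) -> Optional[ModelOption]:
    """Return the cheapest model for the task that meets the accuracy floor."""
    eligible = [m for m in options if m.task == task and m.accuracy >= min_accuracy]
    if not eligible:
        return None  # caller decides whether to relax the floor or reject the request
    # Break cost ties in favor of the more accurate model.
    return min(eligible, key=lambda m: (m.cost_per_1k_requests, -m.accuracy))


if __name__ == "__main__":
    registry = [
        ModelOption("large-llm", "summarize", 0.93, 4.00),
        ModelOption("small-llm", "summarize", 0.88, 0.60),
        ModelOption("intent-clf", "classify", 0.95, 0.05),
    ]
    print(route("summarize", 0.85, registry).name)
    print(route("summarize", 0.90, registry).name)
```

Raising the accuracy floor per request lets the same registry serve both cheap bulk traffic and quality-sensitive calls.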

Resource Optimization Patterns for Continuous Training

Frequent retraining is essential for models that rely on streaming data or evolve with user behavior. But it can quickly eat into compute budgets.

To optimize for continuous training, teams can adopt patterns such as:

- Incremental retraining. Updating only the parts of the model that change, rather than starting from scratch.

- Scheduled training windows. Running jobs during low-demand hours when compute is cheaper and more available.

- Training budget limits. Setting per-project or per-experiment caps on GPU-hour usage.

io.cloud supports these patterns with budget-aware job scheduling and integration with orchestration tools like Prefect or Airflow. By using predefined YAML templates, teams can define automated training pipelines that self-terminate when cost thresholds are met, enabling safe, scalable experimentation.
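The self-terminating behavior can be approximated in plain Python to show the underlying logic. This sketch does not use any io.cloud API; `BudgetGuard`, `train_within_budget`, and the prices are assumptions. The loop checks whether the next epoch is affordable before starting it, so spend never exceeds the cap.

```python
from dataclasses import dataclass


@dataclass
class BudgetGuard:
    max_cost: float            # hard cap for the experiment, in dollars
    gpu_count: int
    price_per_gpu_hour: float
    spent: float = 0.0

    def cost_of(self, hours: float) -> float:
        return hours * self.gpu_count * self.price_per_gpu_hour

    def can_afford(self, hours: float) -> bool:
        return self.spent + self.cost_of(hours) <= self.max_cost

    def charge(self, hours: float) -> None:
        self.spent += self.cost_of(hours)


def train_within_budget(guard: BudgetGuard, epochs: int, hours_per_epoch: float) -> int:
    """Run up to `epochs` epochs, stopping before any epoch that would exceed the cap."""
    completed = 0
    for _ in range(epochs):
        if not guard.can_afford(hours_per_epoch):
            break  # self-terminate instead of overspending
        # A real training step would run here; this sketch only tracks cost.
        guard.charge(hours_per_epoch)
        completed += 1
    return completed
```

Checking before each epoch rather than after it is the design choice that keeps the cap strict: the job stops with budget left over instead of slightly over.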

Leveraging Dynamic Scaling with io.cloud

Traditional cloud providers offer spot instances at reduced rates but with limited availability and unpredictable preemption. In contrast, io.cloud sources compute from a decentralized network of underutilized GPUs, delivering significantly lower costs per GPU-hour without sacrificing reliability.

With dynamic autoscaling on io.cloud, developers can:

- Automatically scale up training jobs across hundreds of GPUs when needed

- Fall back to lower-cost or lower-tier nodes when budgets tighten

- Mix high-performance and long-tail GPUs in a single job for optimal price-performance

This flexibility allows both startups and enterprises to train larger models and run frequent experiments while keeping cloud bills predictable and manageable.
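One way to reason about mixing GPU tiers is a greedy allocation by price per unit of throughput under an hourly budget. The sketch below is an illustration of that idea only, not io.cloud's scheduler; `GpuOffer`, the tier names, prices, and throughput figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class GpuOffer:
    tier: str
    price_per_hour: float       # dollars per GPU-hour
    relative_throughput: float  # training throughput relative to a baseline GPU
    available: int


def plan_allocation(offers: Iterable[GpuOffer], hourly_budget: float) -> Dict[str, int]:
    """Greedily buy the GPUs with the best price per unit of throughput first."""
    plan: Dict[str, int] = {}
    remaining = hourly_budget
    ranked = sorted(offers, key=lambda o: o.price_per_hour / o.relative_throughput)
    for offer in ranked:
        affordable = int(remaining // offer.price_per_hour)
        count = min(offer.available, affordable)
        if count > 0:
            plan[offer.tier] = count
            remaining -= count * offer.price_per_hour
    return plan
```

With a generous budget the plan fills up on the most efficient tier and tops off with cheaper nodes; when the budget tightens, the same function falls back to the lower-cost tier alone. Greedy selection is not guaranteed to be optimal, so treat it as a starting heuristic.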

Enterprise-Grade Security on Decentralized Infrastructure

For organizations in regulated industries or those handling sensitive data, decentralized infrastructure may raise concerns about privacy and compliance. io.net addresses these directly through:

- Secure enclave execution. Supporting trusted execution environments (TEEs) for encrypted data processing

- Data residency controls. Enforcing geographic restrictions so that data and compute remain within specific jurisdictions for regulatory compliance

- Application auto-recovery. Automatic failover and state restoration when nodes fail, ensuring zero-downtime deployments without manual intervention

- Comprehensive audit trails. Logging compute job activity for compliance and security review

- Node reputation scoring. Assigning trust levels to compute providers based on performance, uptime, and community validation
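To show how residency controls and reputation scoring might combine when selecting nodes, here is a minimal sketch. It does not reflect io.net's actual scoring formula or API: the `Node` fields, the weighting, and the thresholds are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Node:
    node_id: str
    region: str
    uptime: float      # fraction of time online, in [0, 1]
    benchmark: float   # normalized performance score, in [0, 1]
    supports_tee: bool


def reputation(node: Node, uptime_weight: float = 0.6) -> float:
    """Weighted blend of uptime and benchmark score (hypothetical formula)."""
    return uptime_weight * node.uptime + (1 - uptime_weight) * node.benchmark


def eligible_nodes(nodes: Iterable[Node], allowed_regions: Set[str],
                   min_reputation: float, require_tee: bool = False) -> List[str]:
    """Filter by jurisdiction, trust level, and TEE support; best-scored first."""
    selected = [
        n for n in nodes
        if n.region in allowed_regions
        and reputation(n) >= min_reputation
        and (n.supports_tee or not require_tee)
    ]
    return [n.node_id for n in sorted(selected, key=reputation, reverse=True)]
```

Applying the residency filter before scoring means a high-reputation node outside the allowed jurisdiction can never be selected, which is the property compliance teams usually care about.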

With features like governance, traceability, and reliability, io.cloud makes it viable to run enterprise-grade workloads on decentralized compute without compromise. Advanced MLOps is as much about control as it is about scale.

By combining multi-model orchestration, budget-aware training patterns, and decentralized compute economics, io.net empowers teams to build sophisticated AI systems without costly infrastructure lock-in or heavy DevOps overhead.

6. Solving Common MLOps Challenges

Even with a well-structured pipeline and scalable infrastructure, MLOps systems often face challenges like performance degradation, runaway costs, and operational complexity that only emerge at scale. io.net helps teams diagnose and address these pain points with built-in observability, cost controls, and flexible debugging tools designed for distributed workloads.

Performance Bottlenecks and Infrastructure Reliability

When model latency spikes or jobs fail intermittently, the root cause could be anything from noisy neighbors on shared GPUs to misconfigured batch sizes. io.cloud helps diagnose these issues by giving teams visibility into:

- GPU-level performance metrics like memory usage, temperature, and utilization

- Node-level logs for each job, with full traceability and filtering by failure state

- Model performance analytics tracked over time to detect drift or regressions

When specific nodes consistently underperform or fail, io.cloud’s scheduler automatically blacklists them and reassigns workloads to maintain reliability without manual intervention. For mission-critical applications, developers can pin jobs to trusted nodes or use redundant inference across multiple GPUs to minimize downtime risk.
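The blacklisting behavior can be pictured with a small tracker that counts consecutive failures per node. This is a sketch of the general pattern, not io.cloud's scheduler; the class name and the threshold of three failures are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set


class NodeHealthTracker:
    """Blacklist a node after too many consecutive job failures."""

    def __init__(self, max_consecutive_failures: int = 3) -> None:
        self.max_consecutive_failures = max_consecutive_failures
        self.failures: Dict[str, int] = defaultdict(int)
        self.blacklist: Set[str] = set()

    def record(self, node_id: str, succeeded: bool) -> None:
        if succeeded:
            self.failures[node_id] = 0  # a success resets the streak
            return
        self.failures[node_id] += 1
        if self.failures[node_id] >= self.max_consecutive_failures:
            self.blacklist.add(node_id)

    def pick_node(self, candidates: Iterable[str]) -> Optional[str]:
        """Return the first candidate that is not blacklisted, or None."""
        for node_id in candidates:
            if node_id not in self.blacklist:
                return node_id
        return None
```

Counting consecutive rather than total failures avoids penalizing a long-lived node for isolated errors spread over weeks of otherwise healthy operation.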

Cost Optimization Strategies for Long-Running Experiments

Training and fine-tuning large models can become a runaway expense, especially when experiments run longer than expected or fail midway. io.cloud helps rein in costs through:

- Max runtime settings to cap jobs that exceed budget or time limits

- Spot instance prioritization to lower per-hour GPU cost without sacrificing capacity

- Usage visualizations that track GPU-hour burn by project, job, or user

These tools establish guardrails around experimentation, avoiding costly surprises without micromanaging every training run.
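The numbers behind a usage view and a runtime cap are simple aggregations. The sketch below assumes job records exported as dictionaries with `project`, `job`, `user`, `gpus`, and `hours` fields; that record shape is hypothetical and not an io.cloud export format.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping


def gpu_hours_by(records: Iterable[Mapping], key: str) -> Dict[str, float]:
    """Sum GPU-hours (GPUs x wall-clock hours) grouped by project, job, or user."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record[key]] += record["gpus"] * record["hours"]
    return dict(totals)


def over_max_runtime(records: Iterable[Mapping], max_hours: float) -> List[str]:
    """List the jobs whose wall-clock time exceeded the configured cap."""
    return [r["job"] for r in records if r["hours"] > max_hours]


if __name__ == "__main__":
    jobs = [
        {"project": "ranker", "job": "j1", "user": "ana", "gpus": 4, "hours": 2.5},
        {"project": "ranker", "job": "j2", "user": "bo", "gpus": 2, "hours": 1.0},
        {"project": "chat", "job": "j3", "user": "ana", "gpus": 8, "hours": 0.5},
    ]
    print(gpu_hours_by(jobs, "project"))
    print(over_max_runtime(jobs, max_hours=2.0))
```

Grouping by GPU-hours rather than wall-clock hours matters because a short job on many GPUs can cost more than a long job on one.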

Debugging Distributed Training and Inference Issues

Distributed workloads create challenging debugging scenarios such as sync failures, deadlocks, or inconsistent gradients that are hard to reproduce locally. These challenges are simplified with tools provided by io.cloud, including:

- Per-node process logs: Real-time streaming capabilities

- Checkpoint snapshots: Rollback and replay for failure analysis

- Sidecar containers: Live debugging, profiling, and visualization support

Whether troubleshooting a finicky PyTorch DDP setup or investigating inference latency spikes under load, these tools enable rapid problem isolation without the need for a dedicated DevOps team.
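Checkpoint rollback and replay follows a simple pattern that can be sketched with the standard library. This is not io.cloud tooling or a PyTorch checkpoint format: the JSON files, the `step_NNNNNN` naming, and the function names are assumptions for illustration.

```python
import json
from pathlib import Path
from typing import Optional


def save_checkpoint(directory: Path, step: int, state: dict) -> Path:
    """Write a checkpoint atomically so a crash never leaves a half-written file."""
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / f"step_{step:06d}.json"
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps({"step": step, "state": state}))
    tmp.replace(path)  # rename is the commit point
    return path


def latest_checkpoint(directory: Path) -> Optional[dict]:
    """Load the newest checkpoint; zero-padded names sort in step order."""
    paths = sorted(directory.glob("step_*.json"))
    return json.loads(paths[-1].read_text()) if paths else None


def rollback(directory: Path, step: int) -> None:
    """Discard checkpoints newer than `step` so a rerun replays from that point."""
    for path in directory.glob("step_*.json"):
        if int(path.stem.split("_")[1]) > step:
            path.unlink()
```

Rolling back to the last checkpoint before a failure and replaying with extra logging enabled is often the quickest way to reproduce an intermittent distributed-training bug.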

Operational excellence in MLOps means identifying, preventing, and recovering from failures, and io.net’s observability and debugging features make this possible at scale, even across dynamic, decentralized infrastructure.

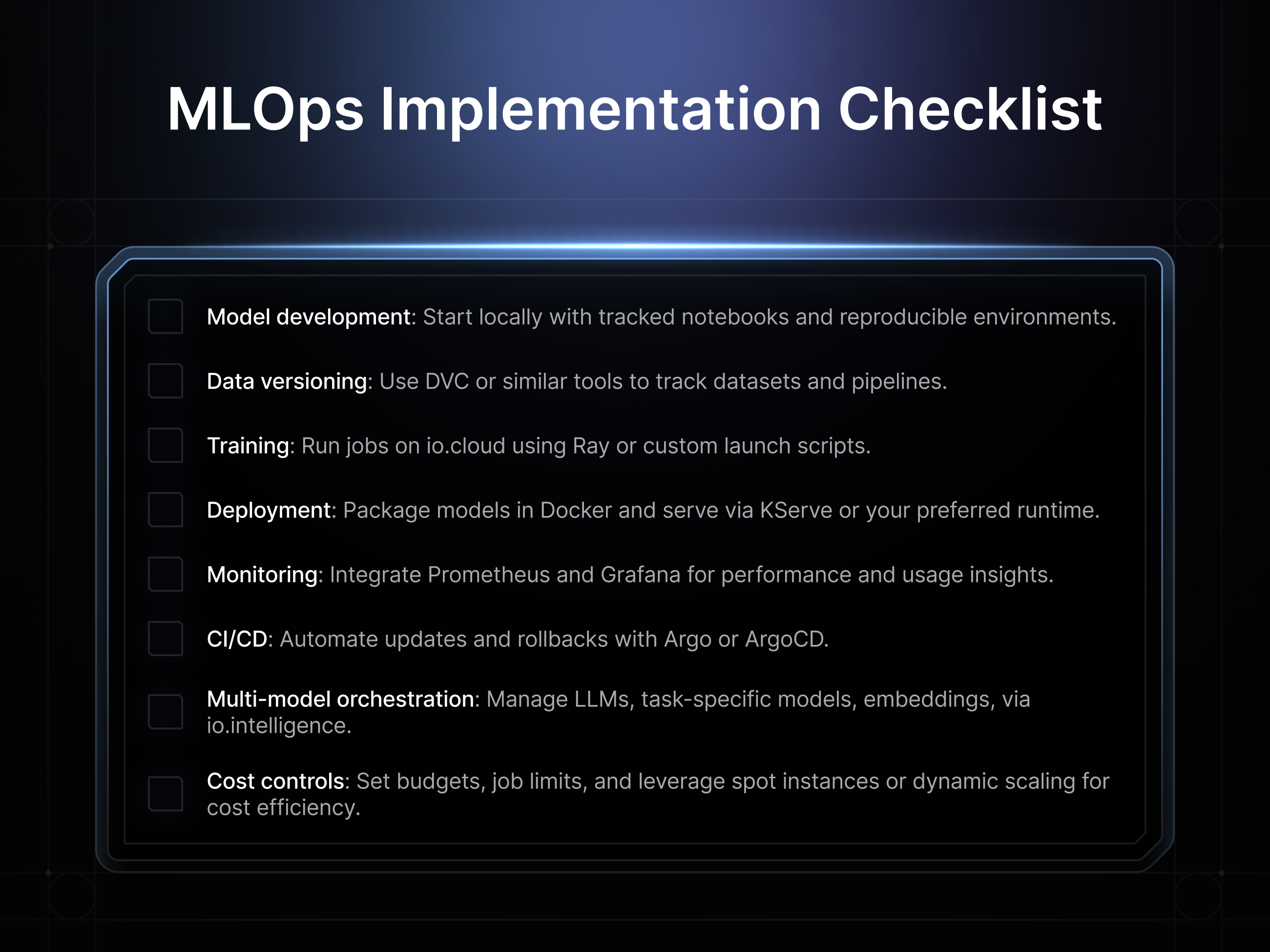

7. Your MLOps Implementation Checklist

Building production-grade machine learning systems doesn’t require full DevOps teams or complex infrastructure management. With modular pipelines and the right tools, even small teams can scale ML workflows efficiently and cost-effectively.

Getting Started

- Create an io.cloud account and explore pre-configured YAML templates

- Launch your first training job using containerized Ray workflows

- Monitor everything from the unified dashboard with cost tracking

- Scale gradually from single models to multi-model orchestration

Whether scaling inference or optimizing model experimentation, io.net's intelligent compute layer keeps your MLOps journey lean, fast, and future-ready without the infrastructure complexity that kills most AI initiatives before they deliver value.

The bottom line: 80% of ML models fail due to infrastructure costs, not algorithm quality. With decentralized compute and cost-aware orchestration, your models can join the 20% that actually make it to production and deliver real value.
